The Surreal Reality of War

Most Arab markets are vivid, colorful and vibrant. Not so the souk in Aleppo, Syria. Destroyed by the horrific war, it is now an example of the surreal reality of war.

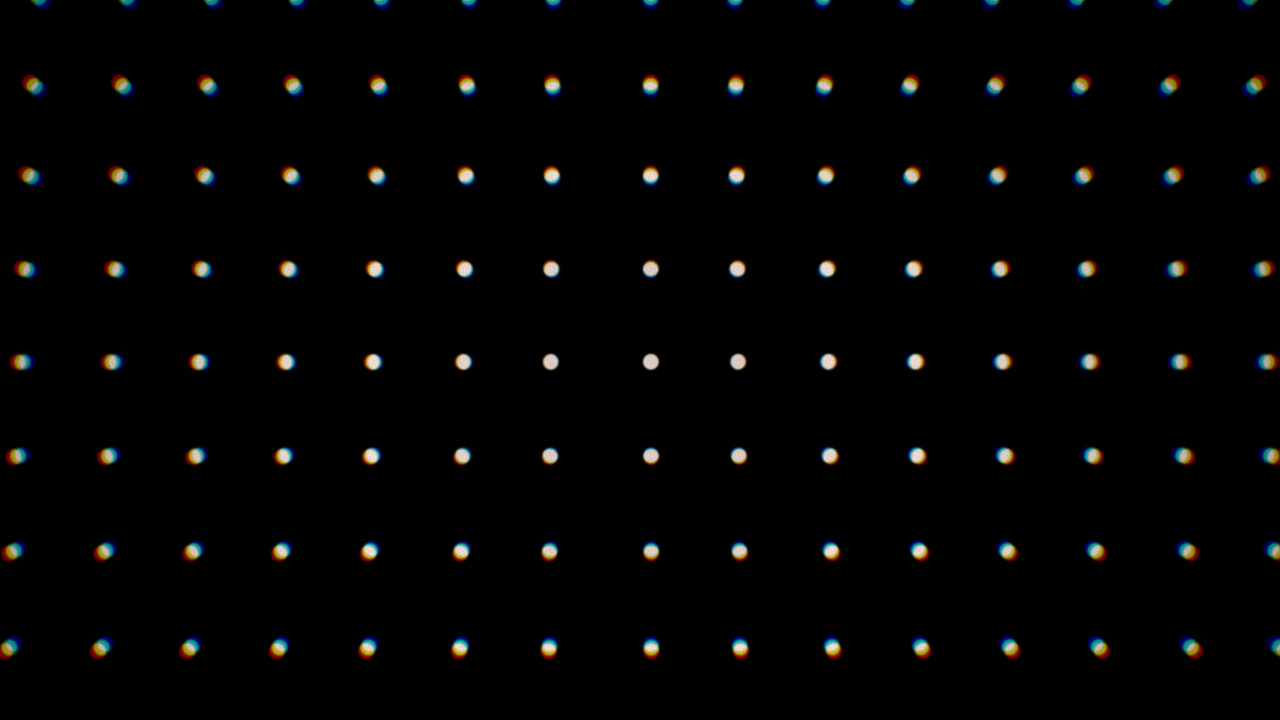

The motivational image is based on a video by Eva zu Beck, who visited Aleppo in September 2019. I've edited the image to add light rays and chromatic aberration.

The narrow cobblestone road is covered by a sheet-metal roof riddled with bullet holes, through which light rays fall into the slightly hazy atmosphere. The doors to the shops are shut, and rubble is piled up in front of them. At the end of the road we can see the blurred outlines of two adults and a young child. Towards the corners of the image, strong chromatic aberration adds to its surreal quality, giving the viewer an uncomfortable, nauseating feeling.